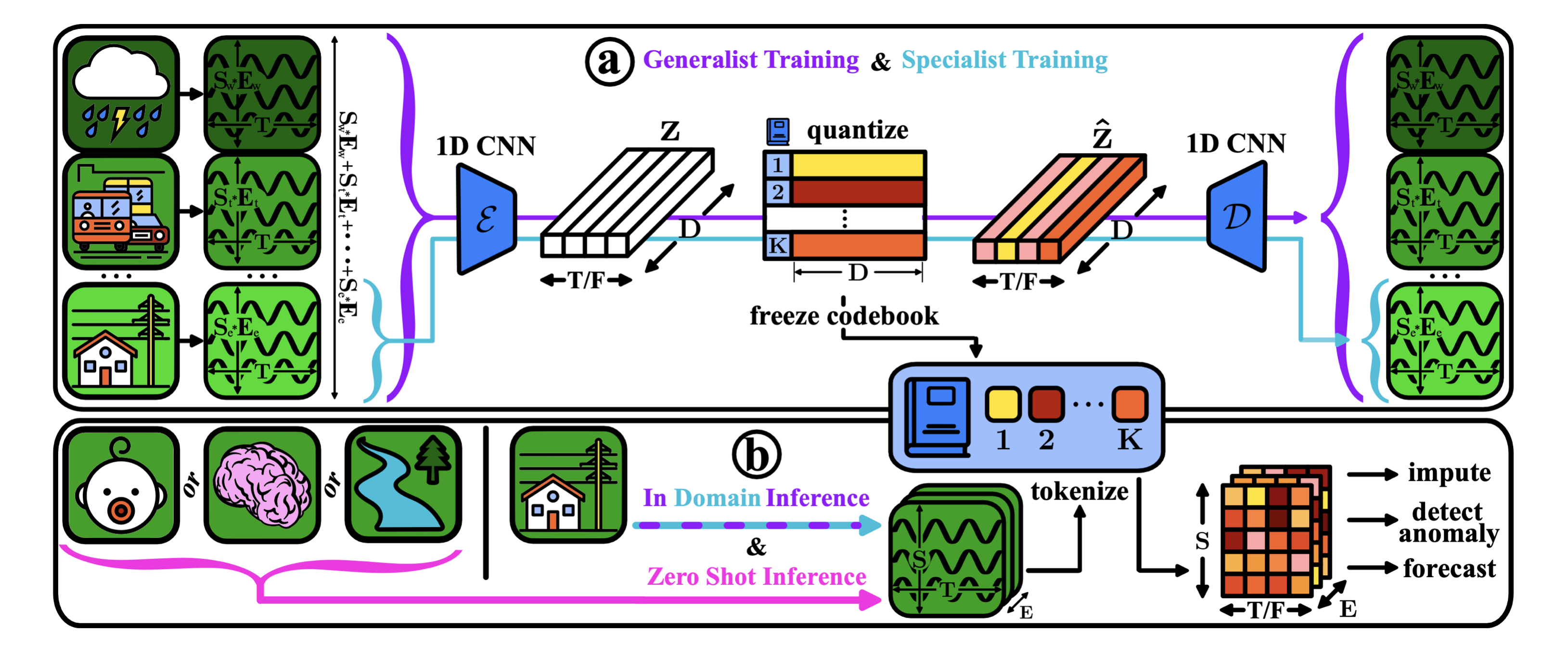

BOUQUET: Learning Large Vocabularies for Time Series and Images with Bernoulli Quantized Tokenization

To be released soon!

BOUQUET learns over 1 billion unique tokens that capture 15x more information, while enabling foundation models that train 6x faster, respond 12x quicker, and use 100x less memory.

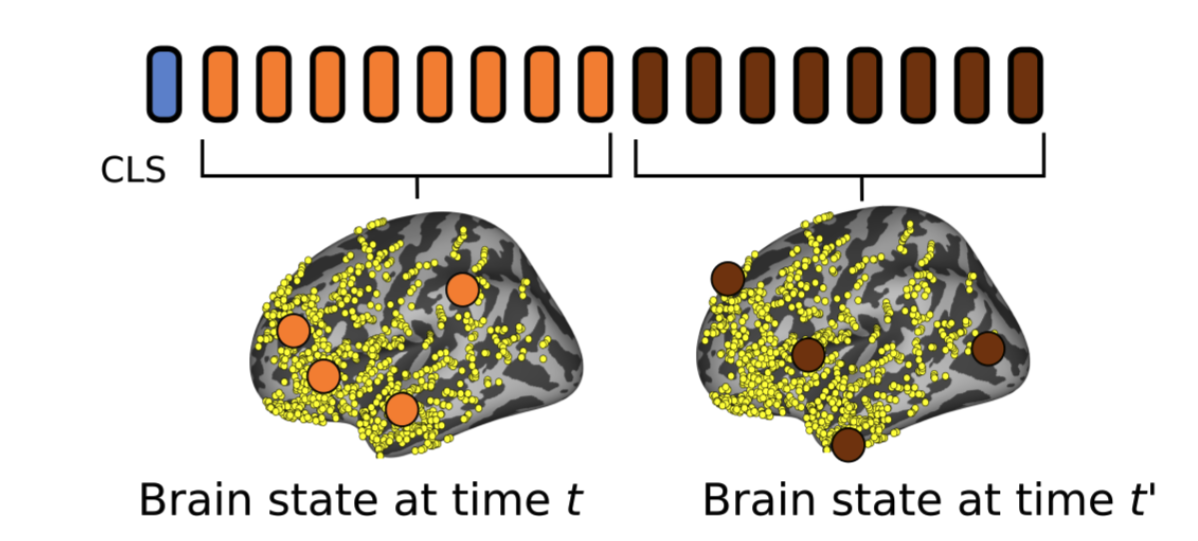

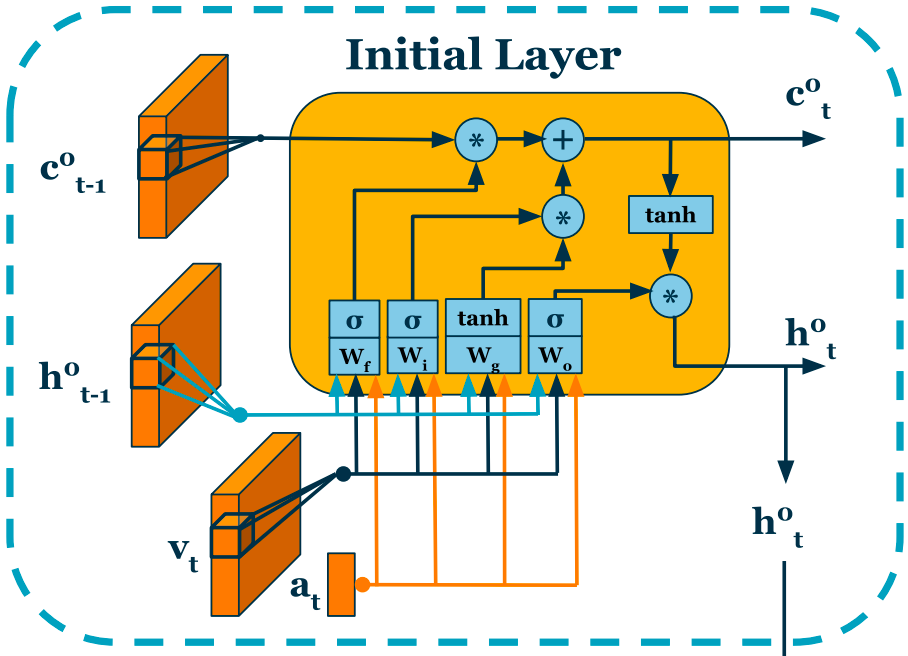

Population Transformer: Learning Population-Level Representations of Neural Activity

Oral Paper (Top 1.8% of Accepted Papers): [Paper] [Code]

Geeling Chau*, Christopher Wang*, Sabera Talukder, Vighnesh Subramaniam, Saraswati Soedarmadji, Yisong Yue, Boris Katz, Andrei Barbu

ICLR 2025

Our next-generation neuroscience foundation model overcomes sparse electrodes, human-to-human variation, and dataset discrepancies.

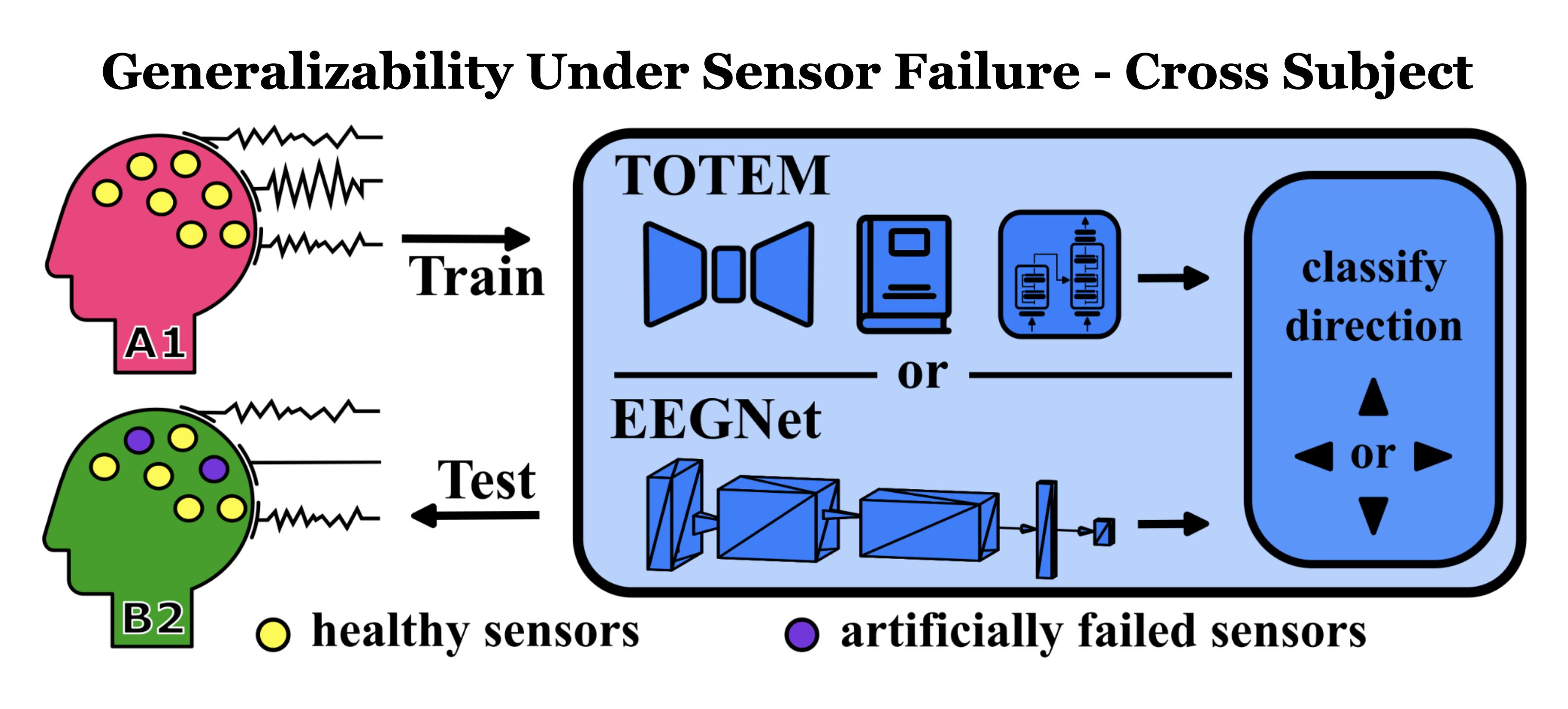

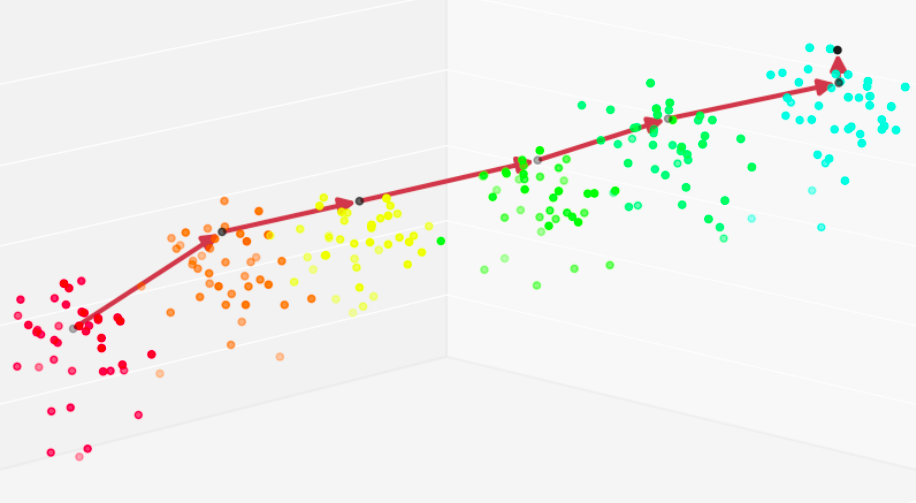

Generalizability Under Sensor Failure: Tokenization + Transformers Enable More Robust Latent Spaces

[Paper] [Code]

Geeling Chau*, Yujin An*, Ahamed Raffey Iqbal*, Soon-Jo Chung, Yisong Yue, Sabera Talukder

COSYNE 2024

We run human neural experiments on 4 subjects, collecting brain data to identify which AI model generalizes best.

-

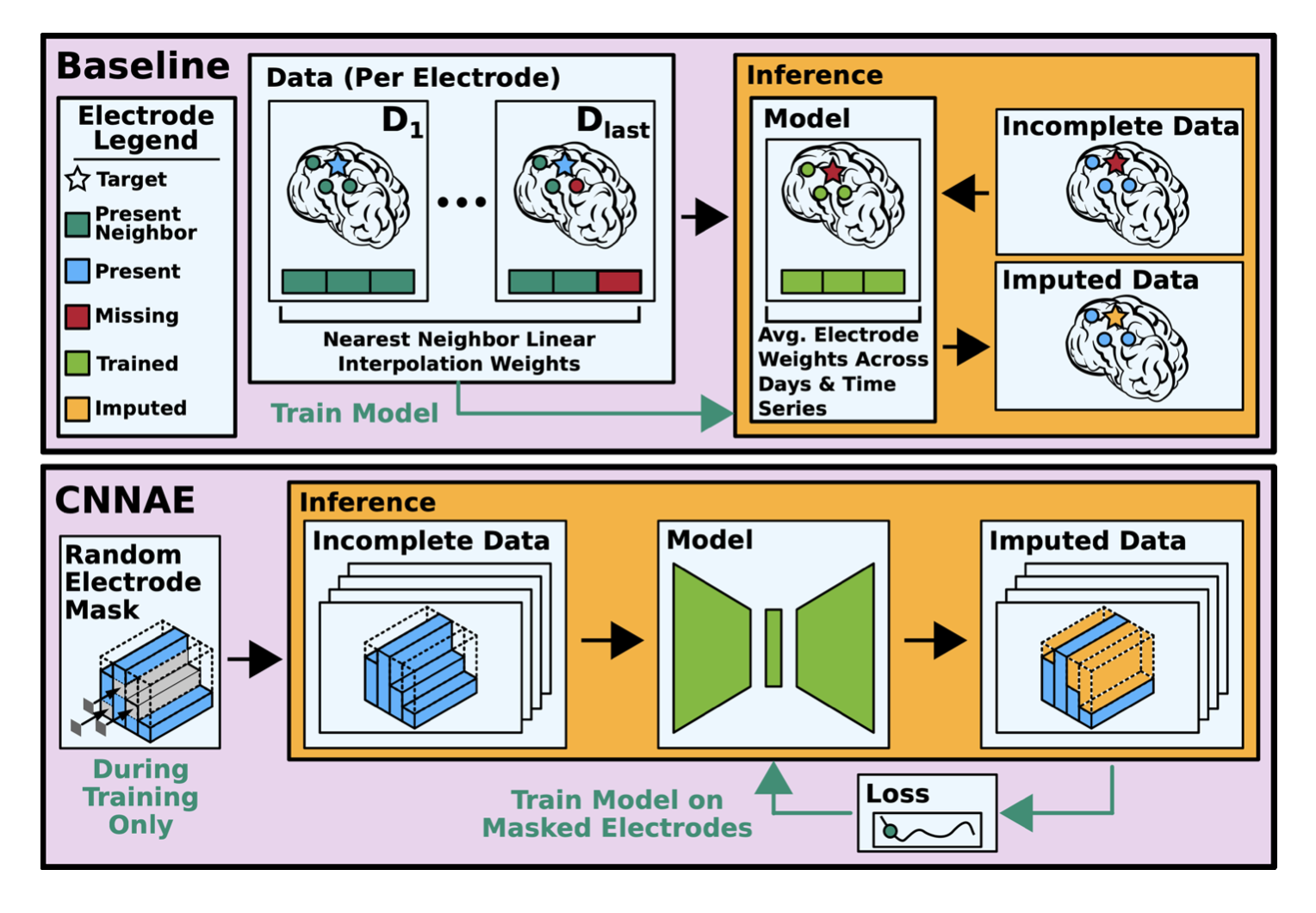

Deep Neural Imputation: A Framework for Recovering Incomplete Brain Recordings

[Paper]

Sabera Talukder*, Jennifer Sun*, Matthew Leonard, Bing Brunton, Yisong Yue

COSYNE 2023 | Learning from Time Series for Health Workshop, NeurIPS 2022

This is among the first neuroscience foundation models, trained on invasive neural recordings from 12 patients to recover lost brain signals.

-

On the Benefits of Early Fusion in Multimodal Representation Learning

[Paper]

George Barnum*, Sabera Talukder*, Yisong Yue

Shared Visual Representations in Human & Machine Intelligence Workshop, NeurIPS 2020

We show that early fusion of audio and visual signals yields noise-robust neural networks that mirror the brain’s own multi-sensory convergence.

-

Architecture Agnostic Neural Networks

Oral Paper: [Paper] [Video]

Sabera Talukder*, Guruprasad Raghavan*, Yisong Yue

BabyMind Workshop, NeurIPS 2020

Inspired by the brain's synaptic plasticity, we built an architecture manifold search algorithm that uncovers whole families of performant neural networks.

-

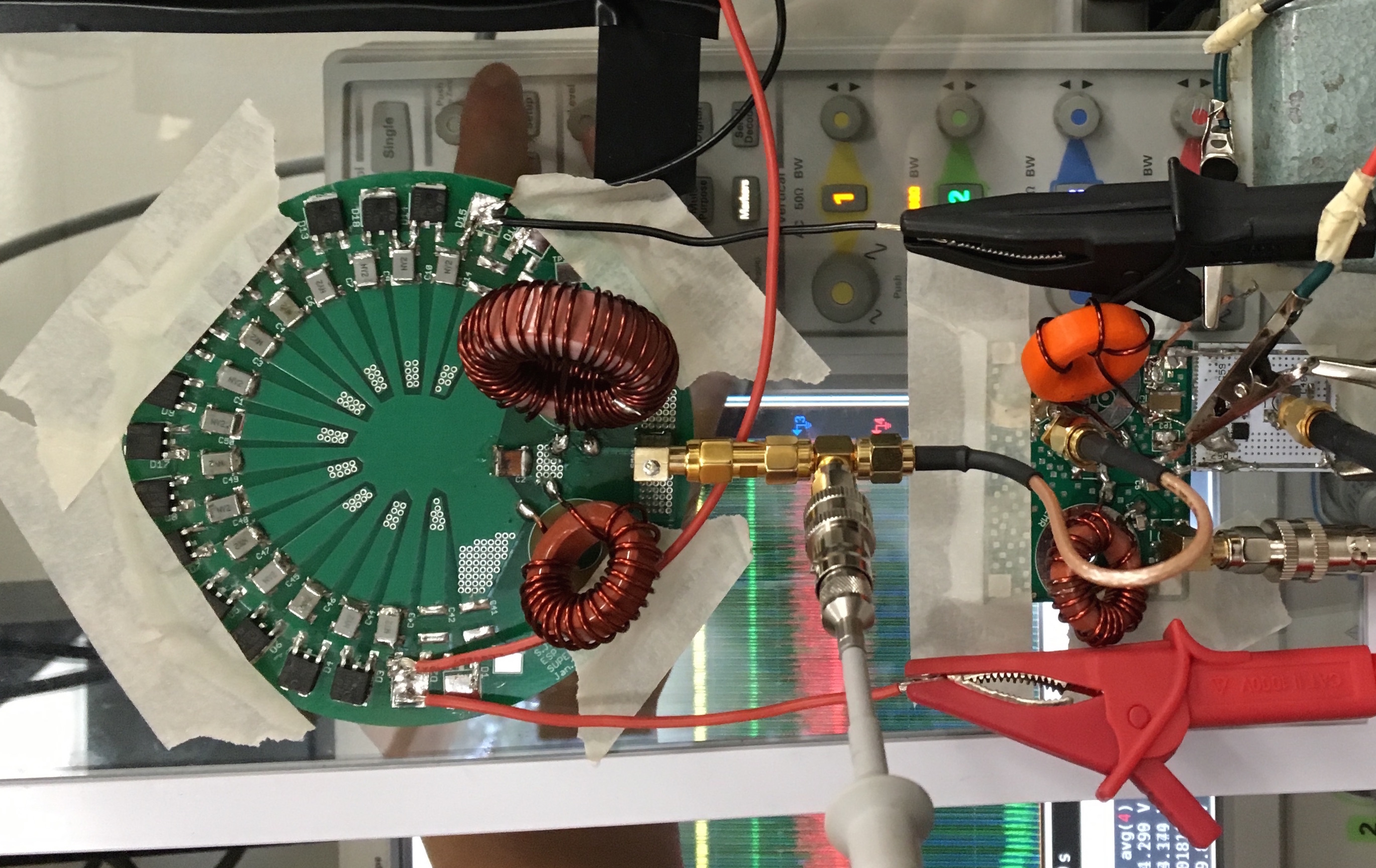

A Smoke Removing DC-DC Converter Composed of a Seven Stage Class DE Rectifier and a Class ϕ2 Inverter

[Honors Thesis]

Sabera Talukder

Electrical Engineering Honors Thesis, Stanford University, 2018

I introduce a miniaturized, portable, low-power electrostatic precipitator that lowers indoor air pollution from rural cook stoves.

-

Exploring Visual Memory Formation in Drosophila melanogaster

[Honors Thesis]

Sabera Talukder

Biochemistry Honors Thesis, Stanford University, 2018

We created a 6-stimulus training suite across 4 rigs to test whether fruit flies can form visual memories.

-

A Portable Electrostatic Precipitator to Reduce Respiratory Death in Rural Environments

Oral Paper: [Paper]

Sabera Talukder, Sanghyeon Park, Juan Rivas-Davila

IEEE COMPEL 2017

Cooking smoke claims 4.3 million lives each year, so we built a circuit that boosts voltage ~100x to remove harmful airborne particles.